Introduction to Dynamic Programming

This is a quick 5 minute easy-to-read programme, for even the least experienced programmers.

So you wanna see c some new programming, or some new epic pro-gamer moves to up your competitive programming skills.

Well, look no further with this CPMsoc blog on dynamic programming!

Well, the obvious question is what is dynamic programming?

Dynamic programming is programming that is dynamic.

A quick google search would reveal (or at least according to Programiz), " technique in computer programming that helps to efficiently solve a class of problems that have overlapping subproblems and optimal substructure property."

What does this actually mean though?

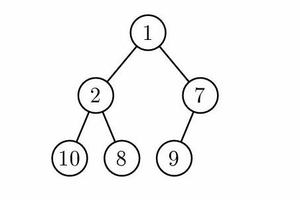

The paramount idea regarding dynamic programming circulates around the idea of sub-programs; essentially taking a large problem and breaking it down into smaller problems. Then, the values of the smaller-problems are saved to be used later to solve the initial, larger problem.

Accordingly, the key benefit of dynamic programming in comparison to other modes of problem-solving, is by saving the result, is has the ability to optimize the solution by decreasing the repetition, thus increasing the overall-run time.

But before, we uncover more into dynamic programming, let's review recursion and memoization.

RECURSION

Welp, we find ourselves asking ourselves, what is meant by recursion.

Once again, a quick, frantic google search uncovers that recursion can be defined as "the determination of a succession of elements(such as numbers or functions) by operation on one or more preceding elements according to a rule or formula involving a finite number of steps".

We can reconceptualise recursion with the aid of an example.

Let’s imagine that we need to calculate how many stairs are in total for the UNSW Stair of Dooms

Main Walkway. But since our programming overlords directors are busy the task falls on me. Now, due to let’s say my very limited mathematics skills and oversight fail in MATH1131, I lack the ability to count them incrementally.

So, collectively, the members of CPMSoc came up with a solution.

Starting with me standing at the top of the staircase, on each step below me stands one CPMSoc member. So I ask the person in front of me how many steps there are, knowing that if the person in front of me says there is an "x" amount of stairs, there is in total and "x+1" amount of stairs. This is a subproblem; I solve for "x + 1" amount of steps, instead of the initial total. Then, the person in front of me asks the same question who asks the person in front, and this goes on and on, until there is no one in front to ask. Once, we reach the bottom of the staircase, where no one is standing, that is the base-case, of zero individuals standing

Now, we know that eventually, as each, person asks the person in-front, we will reach the bottom where no person is standing. This principle is referred to as "bottoming-out" in the context of recursion programming; a condition (in our case, the fact that at the bottom of the staircase there are ZERO people), that stops further calculation.

Below, enclosed are fragments of code that respectively use iteration and recursion.

Recursion vs Iteration

MEMOIZATION

DYNAMIC PROGRAMMING

That's all from us, folks!